Could you please explain what exactly you did?

That’s what I did:

- “simulate” the model with simult_ with zero shocks for 2000 periods and take the variables at T=2000 as the Stochastic Steady State (SSS)

- Simulate the model once again with simult_ (for T=length IRF), starting from the SSS, hitting the economy with an uncertainty shock at time 1 and zero shocks afterwards

- Calculate percentage deviations from SSS.

Basu and Bundick do the following:

Let T1= burnin

T2= length IRF

- “simulate” the model with simult_ for T=T1+T2 with zero shocks for T1 periods and a 1 std shock to uncertainty at t=T1+1.

- Calculate percentage deviations from SSS (value of the variables at t=T1)
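To make the comparison of the two procedures concrete, here is a minimal Python sketch on a toy nonlinear law of motion. It is not the Basu/Bundick model and does not call Dynare's simult_; the function `step`, the parameter values, and the "precautionary drag" term are all hypothetical and only serve to show that, without pruning, restarting from the SSS and running one long simulation with the shock at t=T1+1 give the same IRF.

```python
import math

# Hypothetical toy parameters (assumptions for illustration only).
RHO_Y, RHO_S, KAPPA, SIGMA_BAR, VOL_OF_VOL = 0.9, 0.8, 0.5, 0.1, 0.3

def step(state, eps_vol):
    """One period of the toy model: y is log output, log_sig is log volatility."""
    y, log_sig = state
    log_sig = (1 - RHO_S) * math.log(SIGMA_BAR) + RHO_S * log_sig + VOL_OF_VOL * eps_vol
    y = RHO_Y * y - KAPPA * math.exp(log_sig) ** 2  # higher volatility lowers y
    return (y, log_sig)

def simulate(state0, shocks):
    """Return the path [state0, state1, ...] for a given shock sequence."""
    path = [state0]
    for eps in shocks:
        path.append(step(path[-1], eps))
    return path

T1, T2 = 2000, 20                      # burn-in and IRF length
start = (0.0, math.log(SIGMA_BAR))     # starting point before the burn-in

# Approach 1: burn in with zero shocks, then restart from the SSS.
sss = simulate(start, [0.0] * T1)[-1]
path_a = simulate(sss, [1.0] + [0.0] * (T2 - 1))
irf_a = [100 * (y - sss[0]) for y, _ in path_a[1:]]   # y is in logs

# Approach 2: one simulation of length T1+T2 with the shock at t=T1+1.
path_b = simulate(start, [0.0] * T1 + [1.0] + [0.0] * (T2 - 1))
base = path_b[T1][0]                   # value of y at t=T1
irf_b = [100 * (y - base) for y, _ in path_b[T1 + 1:]]

max_gap = max(abs(a - b) for a, b in zip(irf_a, irf_b))
print("largest difference between the two IRFs:", max_gap)
```

In this toy the state vector fully describes the system, so the two approaches coincide. With pruning the state space is augmented, and this equivalence no longer holds when the restart collapses the augmented state.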

I don’t know what exactly you did, but the outlined approach should be correct and should yield the Basu/Bundick IRFs (apart from a small difference because they do not actually start at the stochastic steady state: their burnin is insufficient).

I have replicated their IRFs using my own codes. Your differences are puzzling. Did you correctly deal with percentage deviations? If the model is in logs, you must use log differences instead of actual ratios.
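The point about logs can be sketched in a few lines of Python. The numbers and function names below are made up for illustration; the sketch only contrasts the percentage deviation for a variable in levels, the log difference for a variable in logs, and the mistake of taking a ratio of logged values.

```python
import math

def pct_dev_levels(x, x_ref):
    """Percentage deviation for a variable stored in levels."""
    return 100.0 * (x / x_ref - 1.0)

def pct_dev_logs(log_x, log_x_ref):
    """Percentage deviation for a variable stored in logs (log difference)."""
    return 100.0 * (log_x - log_x_ref)

# Hypothetical values: consumption at the SSS and one period after the shock.
c_sss, c_t = 0.80, 0.79

exact = pct_dev_levels(c_t, c_sss)                       # variable in levels
log_diff = pct_dev_logs(math.log(c_t), math.log(c_sss))  # variable in logs
wrong = pct_dev_levels(math.log(c_t), math.log(c_sss))   # ratio of logs: not a percentage deviation

print(exact, log_diff, wrong)
```

The first two numbers are close to each other, while the ratio of logs has neither the right size nor, in this example, the right sign.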

ADDENDUM: What I said before only applies to the case without pruning. With pruning, there is an augmented state space. Basu/Bundick, with their single long simulation, set yhat1 to yhat3 to their values at the stochastic steady state. However, when you restart the simulation at the stochastic steady state, you set yhat1 to yhat1+yhat2+yhat3 and yhat2=yhat3=0.
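A minimal Python sketch of why the restart matters, assuming a scalar second-order pruned system with only two components (xf for the first-order part, xs for the second-order part) instead of the three components yhat1 to yhat3 of a third-order solution. The coefficients HX, HXX and HSS are hypothetical. At the stochastic steady state the first-order component is zero and the whole deviation sits in the higher-order component; putting the total into the first-order component instead means the system is no longer at rest.

```python
# Hypothetical scalar coefficients of a second-order pruned state space.
HX, HXX, HSS = 0.9, -0.4, -0.02

def pruned_step(xf, xs):
    """One period without shocks: xf is the first-order, xs the second-order component."""
    xf_next = HX * xf
    xs_next = HX * xs + 0.5 * HXX * xf ** 2 + 0.5 * HSS
    return xf_next, xs_next

def simulate(xf, xs, periods):
    """Return the final components and the path of the total state xf + xs."""
    totals = []
    for _ in range(periods):
        xf, xs = pruned_step(xf, xs)
        totals.append(xf + xs)
    return xf, xs, totals

# Long simulation with zero shocks: converges to the stochastic steady state.
xf_sss, xs_sss, _ = simulate(0.0, 0.0, 2000)
total_sss = xf_sss + xs_sss

# Continue with the components kept separate: the system stays at rest.
_, _, keep = simulate(xf_sss, xs_sss, 5)

# Restart with the total loaded into the first-order component: the system moves.
_, _, collapsed = simulate(total_sss, 0.0, 5)

print("SSS total:", total_sss)
print("components kept:", keep)
print("components collapsed:", collapsed)
```

The drift in the collapsed case occurs without any shock, so it would contaminate an IRF computed from such a restart.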

Thanks a lot for the addendum, Johannes. It’s been very helpful

Dear Johannes,

Do you know why I sometimes get (non-negligible) movements in the level of TFP after an uncertainty shock when I compute the IRFs as Generalized IRFs? I’m using the toolbox by Andreasen et al., so it should not be a problem of not having done enough replications. I really don’t see why this is the case.

I am not that familiar with their toolkit, but it might have to do with the way the process is specified (log-normal vs. in levels)
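One mechanism that would be consistent with this remark, offered only as a possibility, is a Jensen's inequality effect: if log TFP is driven by a symmetric shock, a higher volatility leaves the mean of log TFP unchanged but raises the mean of the level. The Python sketch below uses made-up volatilities and a static log-normal draw, not the Andreasen et al. toolbox or any particular model.

```python
import math
import random

rng = random.Random(0)                      # fixed seed for reproducibility
draws = [rng.gauss(0.0, 1.0) for _ in range(100_000)]

def mean(values):
    return sum(values) / len(values)

# Hypothetical low- and high-uncertainty regimes for ln(A) = sigma * e.
sigma_low, sigma_high = 0.1, 0.2

mean_log_low = mean([sigma_low * e for e in draws])
mean_log_high = mean([sigma_high * e for e in draws])
mean_level_low = mean([math.exp(sigma_low * e) for e in draws])
mean_level_high = mean([math.exp(sigma_high * e) for e in draws])

# Closed form for a log-normal variable: E[A] = exp(sigma^2 / 2).
print("mean of logs  :", mean_log_low, mean_log_high)
print("mean of levels:", mean_level_low, mean_level_high)
print("closed form   :", math.exp(sigma_low ** 2 / 2), math.exp(sigma_high ** 2 / 2))
```

Averaging over simulated paths, as a generalized IRF does, would pick up this shift in the expected level even though nothing happens to expected log TFP.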

Dear friends, sorry to follow this old post.

I ran into a simple problem when studying Basu (2016). They set the standard deviation of the preference shock and that of its volatility shock to around 0.002. Then they set the variance to 1 and report IRFs multiplied by 100 to represent one percent std from steady state. Are those IRFs true levels or magnified? Could anyone help me?

Thanks a lot!

How would you estimate the parameters controlling time-variation in volatility?

Sorry, I’ve not finished it yet.

@zhanghuifd Please explain your question in more detail. I have replicated the Basu/Bundick paper.

@onthetopo If you observe the endogenous process, you can e.g. use a particle filter to independently estimate the exogenous process from the rest of the model. In Basu/Bundick, the uncertainty shock is on a preference shock and therefore unobserved. They use a combination of impulse response function matching and moment matching.

Hi! Sorry to repost it a little late.

In my model, I found that the IRFs to level shocks, in percent deviations from s.s., are around ten times larger than the IRFs to volatility shocks.

Also, the magnitudes of the IRFs and the moments of the variables under volatility shocks are easily affected. The moments are enlarged. Why does this happen? I wonder whether I have calculated the IRFs in the wrong way or whether something else is going on. I am using Dynare and currently set the variance to 1. A few days ago, with the help of Prof. Pfeifer, I tried to set the size of the shock, i.e. the variance, to 0.01. But how do I compute moments or IRFs in percent deviations from s.s. in this case? Sorry, I am still confused. Please give me a hand!

Thanks for any help!

Please be more systematic. Which specification of the shock process and of the shocks block are you using, and what problems are you experiencing? And which options do you use (order, pruning, type of IRFs)?

Hi! Sorry! Thanks for your reply!

I’m still confused about why the magnitudes of the IRFs and the moments of the variables under volatility shocks are so easily affected. The moments are enlarged, but the responses are still small. Why does this happen? So, suppose I set the shocks block like this, with everything else unchanged:

shocks;

var ezt = 0.01;

var evolz = 0.01;

end;

The moments are then smaller than before, but this time they look reasonable after multiplying by 10. How do I compute the corresponding IRFs and moments in this case?

Just now, I happened to find a weird result about the IRFs, attached below.

I just use the simple options with a third-order approximation. The simulated IRFs change once I set irf = 20 rather than irf = 16. Thanks for any help!

IRF_SSS&simulated.pdf (8.07 KB)

I have posted replication files with detailed comments for Basu/Bundick (2017) to https://github.com/JohannesPfeifer/DSGE_mod/tree/master/Basu_Bundick_2017

I’m trying to understand (and put into my own words) why we cannot start the simulation from the stochastic steady state that we calculated, but instead have to run a second simulation from the det. steady state and let the shock happen in period burnin+1 (what you describe in “ADDENDUM: …” further above and in the preamble of Basu_Bundick_2017.mod). Is it that, when we naively start the simulation at the stochastic steady state, Dynare does not know this and again applies the uncertainty correction (precautionary behavior) that made the system transition from the det. to the stoch. steady state in the first place? However, this explanation doesn’t have anything to do with pruning, I guess. Hence, I think I’m not getting the point. Maybe you could briefly tell me where the yhat1, yhat2, yhat3 you mention above come from?

The problem is the augmented state space that results from pruning. Have a look at Section 3.3 of http://faculty.chicagobooth.edu/workshops/econometrics/PDF%202016/Pruning_April_21_2016.pdf

Dear Professor @jpfeifer, I am modelling a negative shock to TFP, so, similar to your code for Basu and Bundick, I make sigma_z_bar*eps_z negative.

My code is:

ln(A) = rho_A* ln(A(-1))- sigma_A*e_A;

If I want to include a time-varying volatility component to model the impact of uncertainty on TFP, should sigma_sigma_A*e_sigma_A enter with a positive or a negative sign?

My equation is:

ln(sigma_A) = (1 - rho_sigma_A)* ln(mean_sigma_A) + rho_sigma_A* ln(sigma_A(-1)) - sigma_sigma_A*e_sigma_A;

Thank you

The sign will not matter, as the variance of the shocks will be the same in both cases. Where the sign matters is for the actual shock realization in the simulation.
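This can be checked with a small Python sketch on a plain AR(1) process. The parameter values are hypothetical and the process is deliberately simpler than the log-volatility equation above: flipping the sign in front of the shock mirrors the simulated path for a given set of draws but leaves the sample variance unchanged.

```python
import random

RHO, SIGMA = 0.9, 0.01           # hypothetical persistence and shock size
rng = random.Random(1)           # fixed seed so both paths use the same draws
draws = [rng.gauss(0.0, 1.0) for _ in range(500)]

def simulate_ar1(sign):
    """Simulate x_t = RHO * x_{t-1} + sign * SIGMA * e_t starting from zero."""
    x, path = 0.0, []
    for e in draws:
        x = RHO * x + sign * SIGMA * e
        path.append(x)
    return path

def variance(values):
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)

path_plus = simulate_ar1(+1.0)
path_minus = simulate_ar1(-1.0)

print("variance with +sigma*e:", variance(path_plus))
print("variance with -sigma*e:", variance(path_minus))
print("first realizations:", path_plus[0], path_minus[0])
```

A positive draw therefore lowers the variable under the minus-sign convention, which is what matters when feeding a specific shock realization into an IRF simulation.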

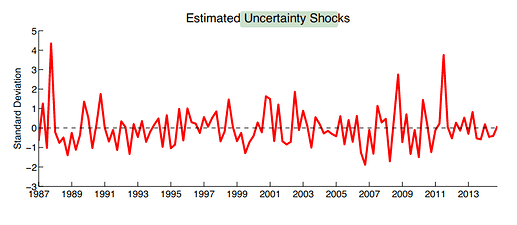

Hi Prof Pfeifer, may I revive this thread? It seems uncertainty shocks (I guess same as time-varying stochastic volatility per the title of this thread) can be used to identify sources of a cluster of small or large volatilities, right? For example, by allowing time-varying volatility for all structural shocks in a given model, one can check the estimated uncertainty shocks for which ones had, say, a lower volatility over the period of interest, i.e., if you are seeking to explain something like sources of the great moderation.

In other words, checking whether bad (good) luck caused a given period of high (low) volatility (with many shocks as candidates) using Basu’s approach is appropriate, right? Like no concerns for identification issues here, I guess.

That isn’t really a question. We did pretty much exactly this in our 2014 JME paper (“Policy risk and the business cycle”).