Hi all,

First, I should say that I’m not actually using Dynare to estimate this model. Rather, I am using a Metropolis-Hastings random-walk (MHRW) algorithm as detailed here: Ch_4_Bayesian_Estimation_Own_Codes.zip - Google Drive

I’m trying to extend Slobodyan and Wouters (2012) to estimate a model with learning. To check the consistency of my MHRW algorithm, I’ve run two MHRW chains from the same starting point, so far with just over 800,000 draws, and then plotted the kernel densities of each of the estimated parameters. The model builds on the Smets and Wouters (2007) model but incorporates boundedly rational agents.

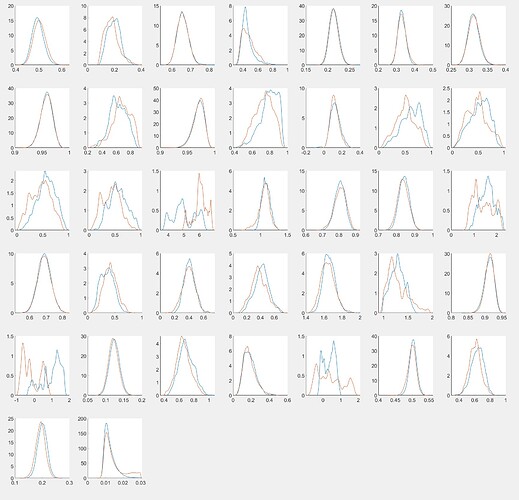

These are the kernel densities of the 37 estimated parameters. For each parameter, each curve is the kernel density fitted to one chain’s draws. If both chains have converged to the true posterior, the kernel densities from the two chains should be close to one another.

As one can see, the kernel densities from the two chains are remarkably close for most of the parameters. However, those for the 17th and 29th parameters diverge sharply, with drastically different modes and means.
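To put a number on this comparison rather than judging it by eye, here is a minimal sketch of the Gelman-Rubin potential scale reduction factor, computed per parameter across chains. The function name `gelman_rubin` and the simulated arrays are hypothetical stand-ins, not my actual chains. The fake draws are i.i.d., whereas real MHRW draws are autocorrelated, and this diagnostic is usually run on chains started from dispersed points rather than the same one.

```python
import numpy as np

def gelman_rubin(chains):
    """Potential scale reduction factor (R-hat) per parameter.

    chains: array of shape (m, n, d) = (chains, draws per chain, parameters).
    """
    chains = np.asarray(chains, dtype=float)
    m, n, d = chains.shape
    chain_means = chains.mean(axis=1)                  # shape (m, d)
    within = chains.var(axis=1, ddof=1).mean(axis=0)   # W: mean within-chain variance
    between = n * chain_means.var(axis=0, ddof=1)      # B: between-chain variance
    var_hat = (n - 1) / n * within + between / n       # pooled variance estimate
    return np.sqrt(var_hat / within)

rng = np.random.default_rng(0)
# Hypothetical stand-in for two chains with 3 parameters; the last one disagrees.
chain_a = rng.normal(size=(5000, 3))
chain_b = rng.normal(size=(5000, 3))
chain_b[:, 2] += 3.0

rhat = gelman_rubin(np.stack([chain_a, chain_b]))
print(rhat)
```

Values close to 1 suggest the chains agree for that parameter. A value well above 1, as for the third parameter here, flags the kind of disagreement I see for parameters 17 and 29.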

Is there any way to improve my MHRW algorithm to get more consistent Monte Carlo chains?

My first guess is that I should perhaps use a deterministic block-MH algorithm, which groups the “consistently” estimated parameters in one block and the not-so-consistently estimated parameters in another block. If anyone knows of any sample code that I can look at which does this, that would be grand.
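For concreteness, this is roughly what I mean by a deterministic block scheme: a sketch, not tested on my model. The function `block_rw_metropolis`, the block grouping, and the proposal scales are all hypothetical, and the standard normal `toy_log_post` merely stands in for the real log posterior kernel.

```python
import numpy as np

def block_rw_metropolis(log_post, theta0, blocks, chols, n_draws, rng):
    """Random-walk Metropolis that updates fixed parameter blocks in turn.

    blocks: list of index lists, one per block (hypothetical grouping).
    chols:  list of Cholesky factors of each block's proposal covariance.
    """
    theta = np.array(theta0, dtype=float)
    lp = log_post(theta)
    draws = np.empty((n_draws, theta.size))
    accepted = np.zeros(len(blocks))
    for i in range(n_draws):
        for b, (idx, chol) in enumerate(zip(blocks, chols)):
            proposal = theta.copy()
            # Perturb only the current block; the other blocks stay fixed.
            proposal[idx] += chol @ rng.standard_normal(len(idx))
            lp_prop = log_post(proposal)
            # Symmetric proposal, so the acceptance ratio is the posterior ratio.
            if np.log(rng.uniform()) < lp_prop - lp:
                theta, lp = proposal, lp_prop
                accepted[b] += 1
        draws[i] = theta
    return draws, accepted / n_draws

# Toy stand-in for the log posterior kernel (assumption): standard normal.
def toy_log_post(theta):
    return -0.5 * np.sum(theta ** 2)

rng = np.random.default_rng(1)
blocks = [[0, 1], [2, 3]]                    # e.g. "well-behaved" vs "problematic"
chols = [0.8 * np.eye(2), 1.5 * np.eye(2)]   # separate step size per block
draws, acc_rates = block_rw_metropolis(
    toy_log_post, np.zeros(4), blocks, chols, 20000, rng
)
print(acc_rates)
```

The appeal is that each block gets its own proposal scale and its own acceptance rate, so the problematic parameters could be tuned separately. The cost is one posterior evaluation per block per iteration, which matters when each evaluation solves and filters the model.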

My second guess is that maybe the MHRW proposal density needs to be changed so that the problematic parameters have larger variances (diagonal entries) in the covariance matrix of the proposal density, which would make the algorithm propose values from a wider range for those parameters.
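A sketch of how I would do that rescaling, assuming the proposal covariance is available as a matrix. The helper `inflate_proposal`, the factor of 2, and the random 37-by-37 matrix are hypothetical. Scaling rows and columns together (D Σ D with D diagonal) keeps the matrix positive definite and leaves the correlations untouched.

```python
import numpy as np

def inflate_proposal(cov, param_idx, factor):
    """Scale the proposal standard deviation of selected parameters by `factor`.

    Computes D @ cov @ D with D diagonal, so correlations are preserved.
    """
    scale = np.ones(cov.shape[0])
    scale[param_idx] = factor
    return cov * np.outer(scale, scale)

rng = np.random.default_rng(2)
# Hypothetical positive definite proposal covariance for 37 parameters.
a = rng.normal(size=(37, 37))
cov = a @ a.T / 37 + 0.1 * np.eye(37)

# Parameters 17 and 29 in 1-based numbering are indices 16 and 28 here.
problem_idx = [16, 28]
new_cov = inflate_proposal(cov, problem_idx, 2.0)

# The Cholesky factor is what the random-walk step would use to draw proposals.
chol = np.linalg.cholesky(new_cov)
print(new_cov[16, 16] / cov[16, 16], new_cov[28, 28] / cov[28, 28])
```

One caveat I am aware of: larger steps for those parameters will generally lower the acceptance rate, so the overall scale may need retuning afterwards.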